The ADUULM-Dataset - A Semantic Segmentation Dataset for Sensor Fusion

This BMVC publication by my colleagues and me attempts to close the gap in annotated datasets for autonomous driving in adverse weather and compromised lighting. It features a multitude of challenging real-world driving scenarios in and around the city of Ulm, Germany, recorded over the course of several seasons. It includes sequences with heavy fog, snow, and rain, and also captures lighting conditions that are challenging for modern vehicular sensor setups, such as glare and blooming effects, as well as recordings at different times of day. The annotations comprise 12 semantic classes (car, truck, bus, motorbike, pedestrian, bicyclist, traffic-sign, traffic-light, road, sidewalk, pole, unlabeled) and can be used for both semantic segmentation approaches and free-space detection methods.
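To illustrate how the same annotations can serve both tasks, here is a minimal sketch that collapses a per-pixel class map into a binary free-space mask. The 12 class names are taken from the list above; the integer IDs (simple enumeration order), the helper name `free_space_mask`, and the choice to count only `road` as drivable are assumptions for illustration, not the dataset's official label encoding.

```python
# Class names as listed in the text. The integer IDs are an ASSUMPTION
# (enumeration order), not the official encoding of the dataset.
CLASS_NAMES = [
    "car", "truck", "bus", "motorbike", "pedestrian", "bicyclist",
    "traffic-sign", "traffic-light", "road", "sidewalk", "pole", "unlabeled",
]
CLASS_TO_ID = {name: idx for idx, name in enumerate(CLASS_NAMES)}


def free_space_mask(label_map, drivable=("road",)):
    """Turn a 2D grid of class IDs into a binary mask (1 = free space).

    `drivable` names the classes treated as free space; using only
    "road" is an illustrative choice.
    """
    drivable_ids = {CLASS_TO_ID[name] for name in drivable}
    return [[1 if pixel in drivable_ids else 0 for pixel in row]
            for row in label_map]


# Tiny hypothetical 2x3 label map, just to exercise the function.
example = [
    [CLASS_TO_ID["car"], CLASS_TO_ID["road"], CLASS_TO_ID["road"]],
    [CLASS_TO_ID["sidewalk"], CLASS_TO_ID["road"], CLASS_TO_ID["pole"]],
]
mask = free_space_mask(example)
```

Before using anything like this on the real data, check the project page for the actual ID assignment and adjust `CLASS_NAMES` accordingly.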

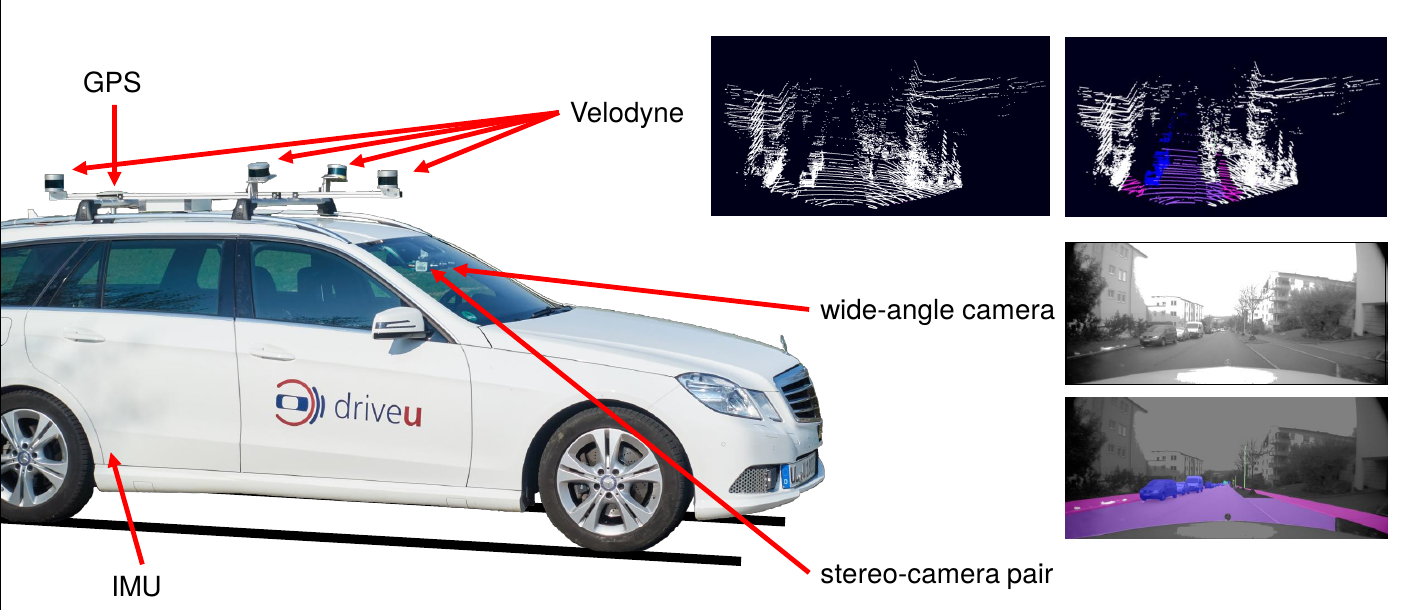

Sensor setup:

- Camera (telephoto lens, wide-angle lens)

- Lidars (16/32 lines)

- Stereo camera footage

- GPS data

- IMU recordings

Please find further details on this amazing work by my office mate Andreas and my colleague Markus, along with download links, on the project page of our department and on GitHub.

Sensor setup on the autonomous recording vehicle used to record the ADUULM dataset


If you intend to cite the paper, please use the following BibTeX entry:

@InProceedings{Pfeuffer_2020_TheADUULM-Dataset,

Title = {The ADUULM-Dataset - A Semantic Segmentation Dataset for Sensor Fusion},

Author = {Pfeuffer, Andreas and Sch{\"o}n, Markus and Ditzel, Carsten and Dietmayer, Klaus},

Booktitle= {31st British Machine Vision Conference 2020, {BMVC} 2020, Manchester, UK, September 7-10, 2020},

Year= {2020},

Publisher= {BMVA Press}

}